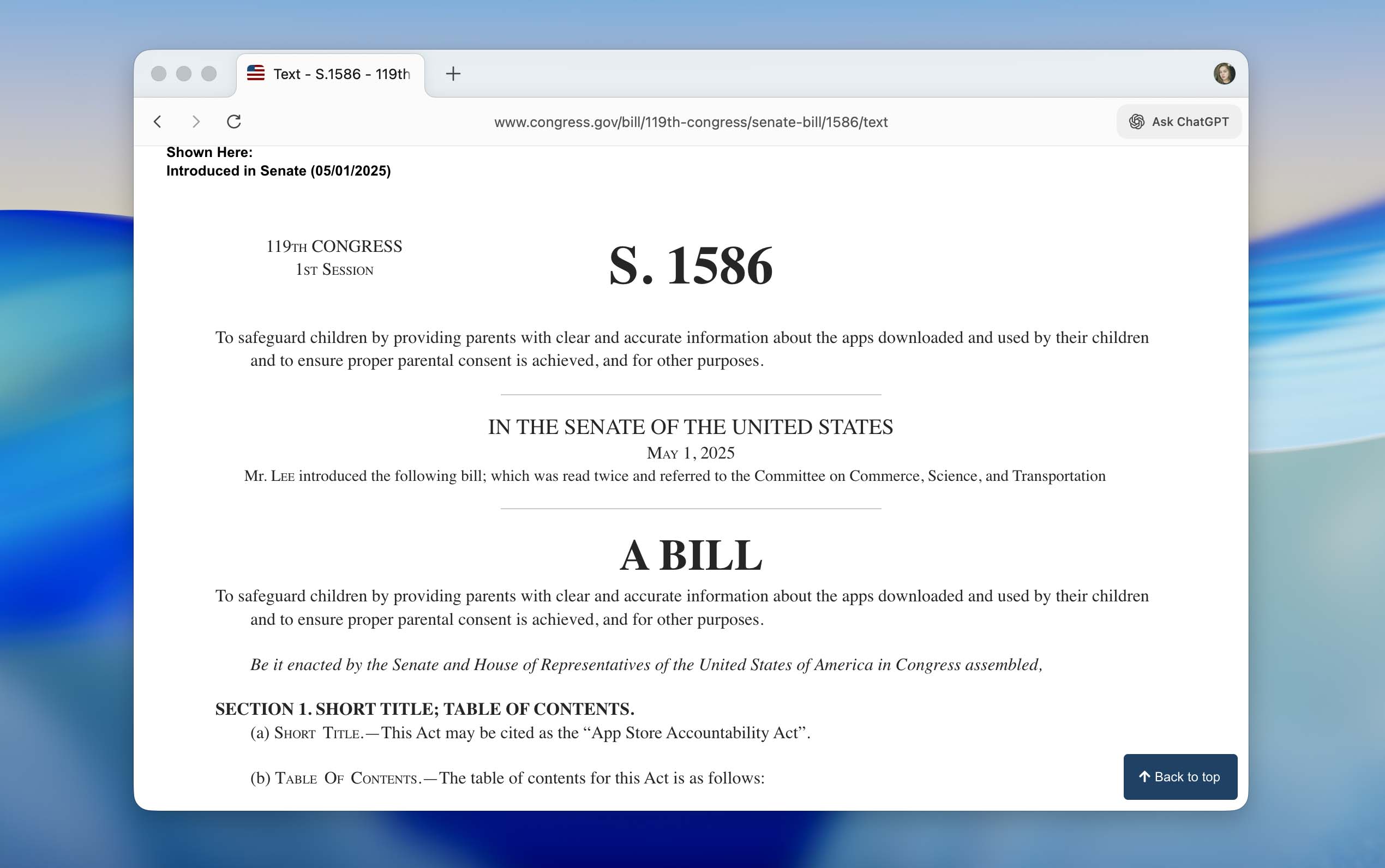

This year has not been gentle with Apple. The company faces regulatory pressure in the EU, tough questions in the U.S. and a steady stream of proposals aimed at rewriting the rules of the App Store. And yesterday, December 10, 2025, CEO Tim Cook arrived in Washington, where he met with members of the U.S. House of Representatives to discuss possible changes to the App Store Accountability Act. Lawmakers are now debating rules that could force Apple's App Store and Google's Play Store to verify user ages and rethink the entire app-download process for children.

What Is the App Store Accountability Act and What Does It Propose?

For those who do not follow every update in U.S. tech regulation, here is a short explanation of the bill and its purpose.

The App Store Accountability Act aims to set national standards for how apps treat young users. Legislators argue that today's setup puts most of the burden on moms and dads while tech companies do next to nothing. So what does the bill actually demand? In simplified form, it introduces several key requirements:

- Mandatory age verification for every new account in an app store. Apple and Google must confirm the user’s age before the account receives permission to download apps.

- Verified parental consent for every minor. If a child wants to install an app, a parent must approve it (every single time).

- Mandatory linking of child accounts to a parent account for continuous oversight. Apple's Family Sharing already follows a similar idea, but the bill would turn it into a strict federal obligation.

- Platform-level responsibility for age-restricted content. If a minor gains access to an app with adult or harmful material, the platform may face consequences even if the app developer fails to enforce its own rules.

- Collection of identifying information during age checks. This part raises the loudest alarms: lawmakers expect "commercially reliable methods," while Apple warns that this requirement may force the company to store documents that users would normally never hand to a tech giant.

If Everything Looks Reasonable, Why Is Tim Cook in Washington?

On the surface, the rules in the bill look fine. Politicians say app stores should check how old users are, alert moms and dads, and keep kids away from sketchy stuff. No big shock there. If you've seen your eight-year-old download a gory game rated for teens, you'd likely agree it's needed. Then again, why is Tim Cook so worked up? Why hop on a plane to D.C. rather than just reply with a quick "sure thing"?

The answer lies in the fine print. The bill does not simply assign Apple a role in child safety; it shifts responsibility and liability straight onto the platform. If an app fails to filter adult content for a minor, lawmakers may still blame Apple because the App Store "allowed" that child to download the app in the first place. The developer may fail in its duty, but Apple may pay the penalty. Cook sees this risk and refuses to accept a scenario in which Apple verifies ages, builds tools, enforces rules and still answers for every slip by Meta, TikTok or any other service.

Another issue concerns data collection. Lawmakers insist on "commercially reliable" age verification. Meta and other app developers push the idea because it moves the burden to Apple. If the App Store performs the checks, everyone else enjoys a comfortable excuse: "The child passed Apple's gate, so the system works." Apple, however, sees a trap. To satisfy the proposed standard, the company may need to gather documents such as IDs or birth certificates from millions of users. Tim Cook understands that this requirement contradicts Apple's long-standing public position on privacy. Apple has built its entire brand on minimizing the sensitive data it stores, not on expanding its collection.

So Cook came to Washington to negotiate. He does not oppose protecting minors. He opposes a framework that blames Apple for the failures of others and forces the company to build a national archive of personal documents.

And What Do Ordinary Users Think About This Law?

When we started preparing this post, we assumed parents would flood comment sections with strong support for the bill and insist that lawmakers pass it without a single change. We expected the classic "finally, someone fixes the internet" energy. Instead, we found the opposite.

Most of the commenters we read take Tim Cook's side. They describe the idea of shifting child-raising duties from families to tech giants as odd at best and irresponsible at worst. In their view, parents should decide what a child may use, not Apple and not Google. Parents, they argue, can explain which apps suit their children's age and which do not. They also point out that Meta and other platforms should police the content inside their own services instead of waiting for Apple to wear the sheriff's badge.

Final Thoughts

The situation is now entering an interesting phase. Our team hopes Tim Cook convinces the authorities and secures changes to the bill. We value child safety, but we also value privacy. We are not excited about handing passport-level data to any platform, even one with a shiny Apple logo.

As for the bill itself, its logic still raises questions. Today's social feeds often jump from a clip about a new toy to a story about a violent crime within seconds. Who is responsible for that? Meta hosts the posts, promotes them with its algorithms, and fails to filter them. Yet the bill points at Apple simply because the App Store allowed the app to be installed. We see no logic in this chain. Apple maintains the storefront, not the inside of every service. Responsibility should stay with the platform that displays the content, not with the company that sells the app icon.

Now we wait to see what comes of Cook's visit. The result may set the rules for years to come and determine how app downloads work for families across the country. We wish Tim Cook success in these negotiations.