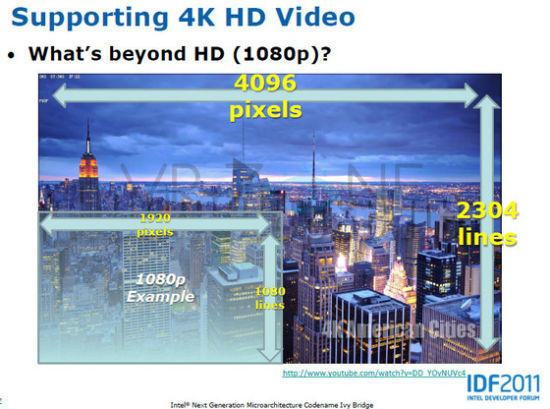

Intel announced last week at its Intel Developer Forum in San Francisco that its next-generation processors, code-named “Ivy Bridge,” will support graphics resolutions of up to 4096 x 4096 on a single monitor. If that sounds incredibly high, it’s because it is. The current standard for ‘high-definition’ tops out at 1920 x 1080, the resolution delivered by Blu-ray discs and video game consoles like the PS3 and the Xbox 360. Known as 4K resolution, 4096-pixel-wide displays are the next plateau for high definition. Cameras such as the Red ONE are already capable of shooting 4K video, even though relatively few displays can show this resolution natively.
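To put those numbers side by side, here is a rough back-of-the-envelope sketch in Python. It only multiplies out the two resolutions quoted above; the function and variable names are made up for illustration.

```python
def pixel_count(width_px, height_px):
    """Total number of pixels in a width x height frame."""
    return width_px * height_px

# Resolutions quoted in the article.
full_hd = pixel_count(1920, 1080)   # today's "high-definition" ceiling
ivy_max = pixel_count(4096, 4096)   # maximum Intel quoted for Ivy Bridge

# How many 1080p frames' worth of pixels fit in the 4096 x 4096 maximum.
ratio = ivy_max / full_hd

print(f"1920 x 1080: {full_hd:,} pixels")
print(f"4096 x 4096: {ivy_max:,} pixels")
print(f"Ratio: about {ratio:.2f}x")
```

In other words, the quoted maximum holds roughly eight times as many pixels as a 1080p frame.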

Intel is hoping to change that. According to VR-Zone, Intel announced that “Ivy Bridge,” the successor to the “Sandy Bridge” processors Apple currently uses, will support 4K resolutions with its integrated GPU. This means even MacBooks could potentially display 4K content. Apple introduced the term “Retina display” for the high-resolution display found on the iPhone 4, whose pixel density is so high that the human eye cannot make out individual pixels at a normal viewing distance. Potentially, a 4K display in something like a 27-inch iMac or 27-inch Thunderbolt Display would meet the criteria for a “Retina display” as well.
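Whether a 27-inch 4K panel would really qualify can be roughly checked with a little arithmetic. The sketch below is only an estimate under stated assumptions: it uses the commonly cited rule of thumb that the eye resolves about one arcminute (not a figure from Intel or an official Apple specification), it assumes a 16:9 panel of 4096 x 2304 since no exact resolution has been announced, and it assumes a desktop viewing distance of 24 inches. All function names are hypothetical.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density from the resolution and the diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

def retina_threshold_ppi(viewing_distance_in, arcminutes=1.0):
    """Density at which one pixel spans `arcminutes` of visual angle.

    Assumption: about one arcminute of acuity, a common rule of thumb.
    """
    angle_rad = math.radians(arcminutes / 60.0)
    return 1.0 / (viewing_distance_in * math.tan(angle_rad))

def is_retina(width_px, height_px, diagonal_in, viewing_distance_in):
    """True if the panel's density meets or exceeds the threshold."""
    density = pixels_per_inch(width_px, height_px, diagonal_in)
    return density >= retina_threshold_ppi(viewing_distance_in)

# Assumed 16:9 4K panel at 27 inches, viewed from an assumed 24 inches.
ppi_4k = pixels_per_inch(4096, 2304, 27)
needed = retina_threshold_ppi(24)
print(f"27-inch 4K panel: {ppi_4k:.0f} ppi, threshold: {needed:.0f} ppi")
print("Retina at 24 inches:", is_retina(4096, 2304, 27, 24))
```

Under these assumptions the hypothetical panel lands at about 174 pixels per inch against a threshold of about 143, so it would clear the bar at desk distance, while a 2560 x 1440 panel of the same size (about 109 pixels per inch) would not.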

“Ivy Bridge” is expected to double graphics performance over “Sandy Bridge,” and to more than double it in some cases. It will also support DirectX 11 and OpenCL, the compute standard originally developed by Apple.

I have long hoped that 4K would become the next standard for so-called high-def, and would much rather have 4K than what is currently being marketed to me as the “next big thing,” 3D. Watching movies in 3D just gives me a headache, motion sickness, or both. Let me see a film or play a game that is so sharp and clear that it’s like looking out the window, and my mind will make up the 3D part of it just fine, as it always has.

Via: AppleInsider

Source: VR-Zone